Editor's Note: The introduction of VMware VSAN into the marketplace has vast implications for the hyperconvergence and software-defined storage sectors. We've invited the leading players in converged infrastructure and software-defined storage to comment on the marketplace as they now see it and on how their solutions are positioned going forward. In the first installment of this commentary series, Scale Computing CEO Jeff Ready explains the hyperconverged solution space as his company currently sees it.

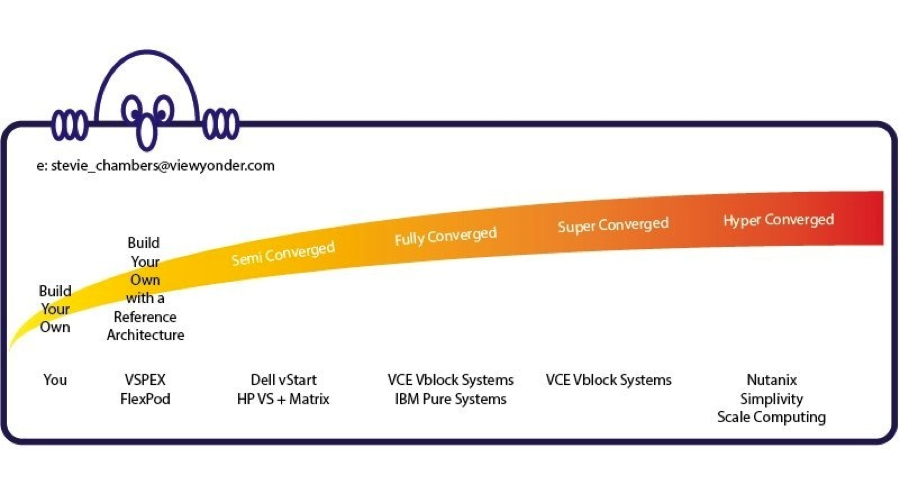

In mid-2012, Steve Chambers, former CTO of VCE, published what he called the Infrastructure Continuum. It was one of the first times the moniker “Hyper Converged” appeared in print to classify the new group of startups converging the hypervisor, storage and servers into a single stack.

“A ~~Rose~~ Hyper Convergence by Any Other Name…”

This graph was published around the same time that analysts such as the Taneja Group were calling “HyperConvergence” the next generation of virtualization. Analysts and new vendors to the space, such as Nutanix, SimpliVity and our very own Scale Computing, latched onto the new classification of Hyper Convergence as a concise way to distinguish our products as a group from the crowded marketplace. “See that do-it-yourself virtualization model that everyone has traditionally deployed for high availability? Hyper Convergence takes out the complexity.” It was easy to understand and gave customers a term to articulate the trend to their peers. You could find the term in marketing collateral from each of our companies, in analyst reports, in blog posts and elsewhere, and before you knew it, “Hyper Convergence” was real.

Not All Hyper Converged Architectures Are Created Equal

The recent VMware Virtual SAN (VSAN) announcement has brought a lot of attention to the Hyper Convergence space, and the need to distinguish our products from the crowded marketplace continues. This time, though, the need is to stand apart not from the market as a whole but from other hyper-converged products, including newer entrants that claim “Hyper Convergence” on the strength of the buzz around the term rather than its definition: combining servers, storage and virtualization into a single stack.

Wikibon has taken a first stab at this with its Server SAN Market Landscape, which segments the market into Appliance Solutions (Nutanix, SimpliVity, Scale, etc.), Software-only Solutions (VSAN, StoreVirtual VSA, etc.) and Data Systems (Ceph, OpenStack, AWS, etc.), but this view of the market overlooks another distinction.

So, what is the best way to differentiate? It lies in the architectural decision to integrate the storage within the hypervisor (think HC3 with KVM, or VSAN with vSphere) versus running the storage in a VSA model on top of the hypervisor (think Nutanix or SimpliVity).

Why does it matter? There are many differences between the products in each of these sub-categories of Hyper Convergence (and I’m sure there will be plenty of company-generated material focusing on them), but this one design decision separates the herd at a very fundamental level: simplicity. That means simplicity in the I/O path from the guest to the disk and back, and also simplicity in removing the administration of a legacy SAN/NAS layer.

“A designer knows he has achieved perfection not when there is nothing left to add, but when there is nothing left to take away.”

-- Antoine de Saint-Exupéry

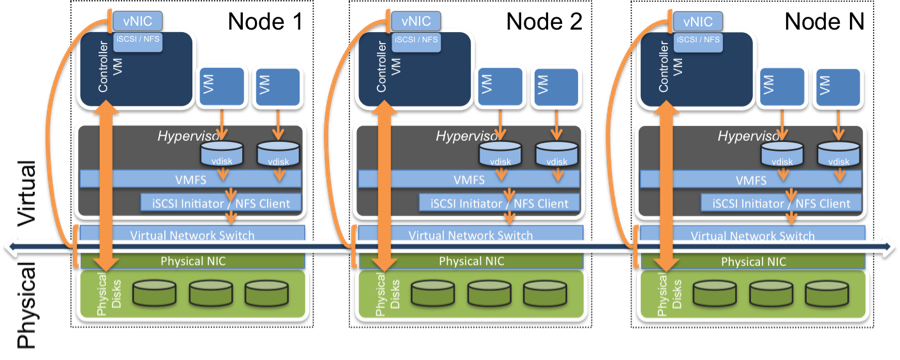

The complexity added to the I/O path is clear when you look at the architecture of the VSA model. In this approach, a Controller VM sits above the hypervisor to present the server’s local storage to the rest of the cluster.

Walking through the I/O path of a 4K write, you’ll notice that each I/O must pass through the hypervisor at least twice:

- The guest VM issues a 4K block I/O to an emulated or paravirtualized disk device

- That device is backed by a VMDK file that represents a virtual disk

- VMFS translates the VMDK block I/O into file system semantics, storing data in 1MB blocks

- VMFS uses VMware storage drivers to execute the storage commands on a logical disk (LUN) hosted on the Controller VM (also known as a Virtual Storage Appliance, or VSA). To do this, it has to use SAN or NAS storage protocols such as iSCSI or NFS. Each I/O results in network storage protocol requests and responses being sent to the Controller VM, just as they would be sent to an external SAN or NAS device

- The Controller VM generates I/O requests to multiple redundancy sets, possibly again grouping multiple I/Os into stripes of various sizes across the array, and sends them back through a virtual switch on the hypervisor

- For the local I/O to be written to disk on each recipient server, it is then sent back through the hypervisor, where the blocks are physically written to the disk spindles

- The disks then acknowledge the writes to the Controller VM, back up through the hypervisor again

- The Controller VM can finally acknowledge the write through the iSCSI target or NFS server, back across the network to the initiator

- The iSCSI initiator notifies the hypervisor, which updates the VMFS and VMDK metadata and ultimately acknowledges the write back to the originating VM
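The walkthrough above can be condensed into a small Python model that records which layer holds the I/O at each step and counts how often it enters the hypervisor. This is a simplified sketch under stated assumptions: it follows only the local replica, ignores writes to remote redundancy sets, and the hypervisor-integrated path is a hypothetical minimal path included for contrast, not a description of HC3, VSAN or any other product’s internals.

```python
# Layers an I/O can be "in" at any moment (simplified, hypothetical model).
GUEST, HYPERVISOR, CONTROLLER_VM, DISK = "guest", "hypervisor", "controller_vm", "disk"

# VSA model, local replica only: one 4K write and its acknowledgement,
# following the steps listed in the walkthrough.
VSA_PATH = [
    GUEST,          # guest issues the 4K write
    HYPERVISOR,     # virtual disk device, VMDK, VMFS, storage driver (iSCSI/NFS)
    CONTROLLER_VM,  # iSCSI target / NFS server inside the Controller VM
    HYPERVISOR,     # virtual switch and storage stack on the way to disk
    DISK,           # blocks written to the spindles
    HYPERVISOR,     # disk acknowledgement travels back up
    CONTROLLER_VM,  # Controller VM acknowledges through its target/server
    HYPERVISOR,     # initiator updates VMFS/VMDK metadata
    GUEST,          # write acknowledged to the originating VM
]

# Hypothetical hypervisor-integrated path: storage runs inside the hypervisor,
# so there is no Controller VM and no iSCSI/NFS hop.
INTEGRATED_PATH = [GUEST, HYPERVISOR, DISK, HYPERVISOR, GUEST]


def hypervisor_entries(path):
    """Count how many times the I/O enters the hypervisor layer from another layer."""
    return sum(
        1 for prev, cur in zip(path, path[1:])
        if cur == HYPERVISOR and prev != HYPERVISOR
    )


def layer_transitions(path):
    """Count every hand-off between layers (each is a point where data may be copied)."""
    return sum(1 for prev, cur in zip(path, path[1:]) if prev != cur)


for name, path in [("VSA", VSA_PATH), ("integrated", INTEGRATED_PATH)]:
    print(f"{name:>10}: {hypervisor_entries(path)} hypervisor entries, "
          f"{layer_transitions(path)} layer transitions")
```

Run as-is, it reports 4 hypervisor entries and 8 layer transitions for the VSA path versus 2 and 4 for the integrated path. The downward half of the VSA path alone (the first four entries of `VSA_PATH`) already enters the hypervisor twice, consistent with the “at least twice” figure in the walkthrough.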

Beyond the extra steps the I/O must traverse, the VSA model also forgoes the benefit of shared memory enjoyed by products integrated within the hypervisor. In the path above, every step involves copying data in memory from one location to another, passing the “payload” along with metadata and control “bits” that are continuously transformed and modified as the request moves from one layer to the next.
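To make the shared-memory point concrete, here is a minimal Python sketch contrasting a path where each layer takes a private copy of a 4K payload with one where every layer wraps a view of the same buffer. The layer names and the dict-based “request” are hypothetical stand-ins, not any vendor’s data structures; Python’s `memoryview` plays the role of shared memory.

```python
# Hypothetical layer names loosely following the VSA walkthrough.
LAYERS = ["virtual disk device", "VMFS", "iSCSI initiator", "Controller VM", "disk driver"]


def pass_by_copy(payload: bytearray, layers: list[str]) -> tuple[dict, int]:
    """Each layer takes a private copy of the payload and wraps it in its own metadata.

    Returns the final request and the total number of payload bytes copied.
    """
    request, copied = {"data": payload}, 0
    for layer in layers:
        # bytearray(...) always allocates a new buffer and copies the bytes into it
        request = {"layer": layer, "data": bytearray(request["data"])}
        copied += len(request["data"])
    return request, copied


def pass_by_reference(payload: bytearray, layers: list[str]) -> tuple[dict, int]:
    """Each layer wraps a view of the same buffer: metadata changes, data is never copied."""
    request = {"data": memoryview(payload)}
    for layer in layers:
        # Only the small metadata dict is rebuilt; the memoryview still points at `payload`
        request = {"layer": layer, "data": request["data"]}
    return request, 0


payload = bytearray(4096)  # one 4K guest write, zero-filled for the demo
copied_req, copied_bytes = pass_by_copy(payload, LAYERS)
shared_req, shared_bytes = pass_by_reference(payload, LAYERS)

payload[0] = 0xFF  # change the original buffer after the hand-off
print(copied_bytes, copied_req["data"][0])  # private copies don't see the change
print(shared_bytes, shared_req["data"][0])  # the shared view does
```

With five layers, the copying path moves 20,480 bytes to deliver a single 4K write, while the shared path moves none. The trade-off, not modeled here, is that layers sharing one buffer must coordinate so that none of them mutates data another layer is still using.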

For the IT generalist who is constantly being asked to do more with less (generic, I know, but fitting in today’s climate), the added layer of administration required by the VSA model also presents a challenge. This is arguably more important than the I/O path details we just walked through, because it affects the user’s day-to-day work.

So, what is the added complexity? A VSA is essentially a virtual SAN, and it is not fundamentally any different from, or easier to manage than, a physical SAN. By integrating storage into the hypervisor, we eliminate the need for traditional storage protocols like iSCSI and NFS. That means no more religious debates over which storage protocol is right for the guest VMs and their applications (Can I run Exchange on NFS?). It also means no more RAID sets, iSCSI targets or LUNs, initiators, multi-pathing, storage security, zoning or fabric to set up or manage. For most mid-market companies, the added complexity of a SAN/NAS (whether physical or virtual) is a huge barrier to implementing and managing high availability for their virtual environment.

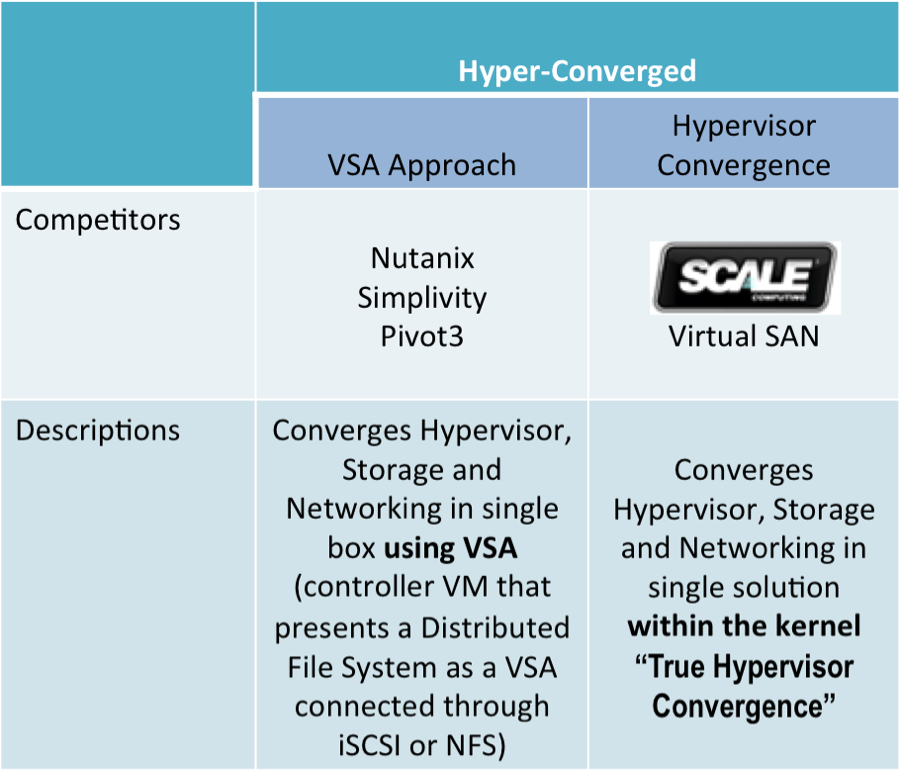

I’ll leave it to the analysts to define the terms, but I believe it is this architectural distinction that will rightfully separate the Hyper Converged products in the market. The chart below, which uses the terms “VSA Approach” and “Hypervisor Convergence,” shows the major players in the market as it currently stands.