Designing the Next Generation vSphere Storage Systems

Today, most enterprises are using traditional storage arrays (NAS or SAN) with spinning disk from a big name storage company like EMC or NetApp. In fact, I would bet that the vast majority of those companies have even bought new storage arrays, of the same type, from the same big name storage companies in the past year.

Unfortunately, too many of those enterprises (and even too many of the VARs) have never taken the time to learn about the next generation storage solutions that are now available. These storage solutions all have one thing in common – they use flash to offer impressive performance. However, not all of them are completely redesigned.

Today, I visited Coho Data and listened to founder and chief architect Andy Warfield speak (video is below). Andy was one of the authors of the Xen hypervisor, working specifically on storage virtualization. He’s brought that knowledge of virtualization and storage to Coho Data, where they have scrapped all past storage array designs and started fresh.

(the complete list of Coho Data videos from Virtualization Field Day 3 is located here)

What Does Coho Data Offer?

Coho Data started off looking to fill the storage need of the many web companies that began on public clouds, like Amazon Web Services (AWS), and then saw their usage and bills skyrocket. Those companies then looked to build their own hosted datacenters, but designing, planning, and managing enterprise storage was foreign to them. They especially weren’t used to estimating and planning their storage on a 3-5 year cycle, as most enterprises do. They had consumed storage “in the cloud” as an infinite and fast resource where you pay for consumption. Coho wanted to build an on-demand, scalable storage system for the enterprise that you consume the way you would in a cloud environment.

Here are some quotes from Andy when asked what Coho Data is:

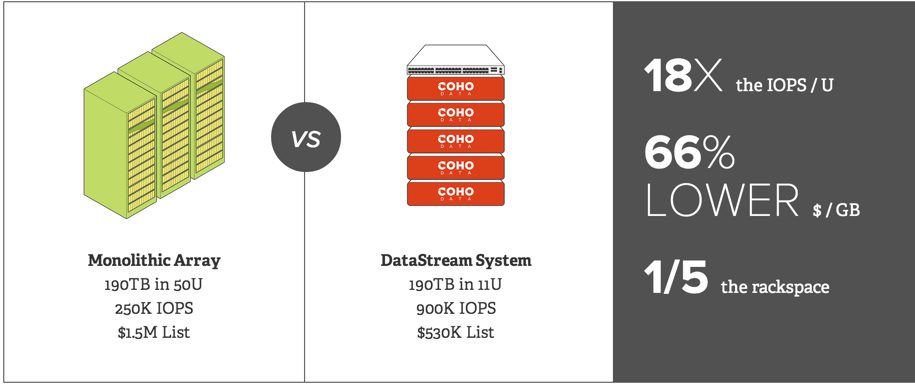

“we sell performance-dense, cost-effective and completely scalable storage”

“we build software and package it on commodity hardware”

“you grow the storage as you need it”

Coming from the virtualization side of things, their innovative and completely re-designed storage solution, which GA’ed today, has become a fantastic solution for vSphere storage.

How Does Coho Data Work?

Coho Data is both a storage and network virtualization solution and, thus, is a “converged” offering, but not a convergence of compute and storage. Coho says that the convergence of storage and networking makes much more sense because of the raw performance and dynamic flexibility it can provide.

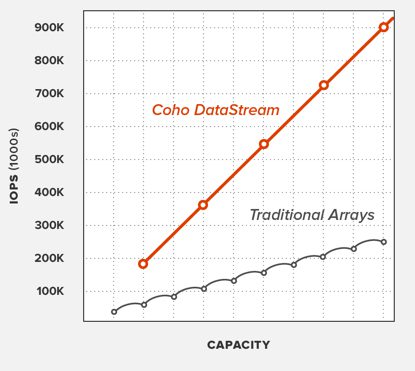

Today, Coho serves up NFS to vSphere hosts from the Supermicro 2U Twin and Arista SDN switch that they use as their hardware platform. The hosts see the array as a single NFS datastore. Software in the switch intelligently routes each NFS request to the right disk array to serve it. Each array has 4 x 10GbE ports and, because Coho designed their solution for performance, 2 x 2U units plus a 1U switch (giving you 20TB usable and 3.2TB of PCIe flash over 40Gbit/s of access bandwidth) can achieve 180K IOPS.

With 4U of storage and a 1U switch (giving you 80TB of usable storage, 12.8TB of PCIe flash, and 160Gbit/s of bandwidth), you can reach up to 720K IOPS.
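A quick way to sanity-check those two configurations is to compare them figure by figure. The snippet below is only a sketch using the numbers quoted above; the dictionary keys and variable names are my own labels, not Coho terminology.

```python
# Figures quoted above for the two configurations (labels are illustrative).
small = {"usable_tb": 20, "flash_tb": 3.2, "bandwidth_gbit": 40, "iops": 180_000}
large = {"usable_tb": 80, "flash_tb": 12.8, "bandwidth_gbit": 160, "iops": 720_000}

# Ratio of the larger configuration to the smaller one, per resource.
ratios = {key: large[key] / small[key] for key in small}

for key, ratio in ratios.items():
    print(f"{key}: {small[key]} -> {large[key]} ({ratio:.1f}x)")

# Share of usable capacity that is backed by PCIe flash in each configuration.
flash_share_small = small["flash_tb"] / small["usable_tb"]
flash_share_large = large["flash_tb"] / large["usable_tb"]
print(f"flash share: {flash_share_small:.0%} vs {flash_share_large:.0%}")
```

Every quoted figure grows by the same factor, and the flash-to-capacity ratio stays constant, which is what you would expect from a system where capacity, flash, bandwidth, and IOPS are added together in fixed building blocks.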

Why Hybrid and Not All-Flash?

As Andy said, any solution that uses flash has a great struggle in trying to find a way to use ALL of the performance of that flash and ensure that you get the most out of your investment. Too many solutions allow you to put SSD into a traditional drive slot but then cannot take advantage of that performance.

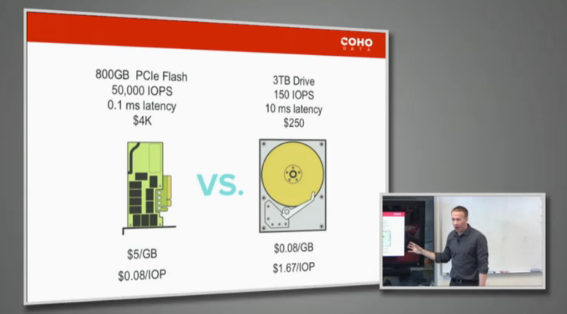

Coho has run extensive tests. What they found is that an Intel PCIe flash card costs $5/GB, which works out to $0.08/IOPS (given an 800GB card providing 50K IOPS at 0.1ms latency and costing $4K). On the other side, a standard 3TB drive costs $0.08/GB, which works out to $1.67/IOPS (given 150 IOPS, 10ms latency, and a $250 price). In other words, flash is roughly 20 times cheaper per IOPS but roughly 60 times more expensive per GB. Thus, using all-flash for every application is tough to justify.
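If you want to rerun that math with your own pricing, here is a minimal Python sketch. The `Device` class and its field names are hypothetical helpers of mine, and I'm assuming decimal units (3TB = 3,000GB); only the price, capacity, and IOPS figures come from Andy's talk.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    """Hypothetical container for the per-device figures quoted above."""
    name: str
    price_usd: float
    capacity_gb: float
    iops: float

    @property
    def usd_per_gb(self) -> float:
        return self.price_usd / self.capacity_gb

    @property
    def usd_per_iops(self) -> float:
        return self.price_usd / self.iops


# Figures from the talk; 3TB is taken as 3,000GB (an assumption).
pcie_flash = Device("PCIe flash", price_usd=4000, capacity_gb=800, iops=50_000)
sata_disk = Device("3TB disk", price_usd=250, capacity_gb=3000, iops=150)

for dev in (pcie_flash, sata_disk):
    print(f"{dev.name}: ${dev.usd_per_gb:.2f}/GB, ${dev.usd_per_iops:.2f}/IOPS")

# How much more each device costs on the metric where it loses.
gb_premium = pcie_flash.usd_per_gb / sata_disk.usd_per_gb
iops_premium = sata_disk.usd_per_iops / pcie_flash.usd_per_iops
print(f"flash costs {gb_premium:.0f}x more per GB")
print(f"disk costs {iops_premium:.0f}x more per IOPS")
```

The two ratios at the end are the whole argument for hybrid: neither medium wins on both capacity cost and performance cost, so you pay for flash where you need IOPS and for disk where you need GB.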

Then their question was: should the flash be in the server or be connected across the network? Also, what should the queue depth be? What they found was that, with the network adding only 40 microseconds of RTT latency, having flash across the network is not a problem unless you are running high-frequency trading (HFT).

As for the queue depth, if you go direct to PCIe flash with a queue depth of 1, you’ll have 133 microseconds of latency and be able to push 6,708 IOPS. If you push the queue depth up to 32, you’ll increase latency to 235 microseconds but be able to push 134,538 IOPS to the disk – WOW!
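Those queue depth numbers line up nicely with Little's Law, which says throughput is roughly the number of outstanding requests divided by the latency of each one. The sketch below is my own back-of-the-envelope check, not Coho's test harness; `iops_ceiling` is a hypothetical helper, and the last line assumes the 40 microsecond network RTT simply adds to the device latency, which is a simplification.

```python
def iops_ceiling(queue_depth: int, latency_us: float) -> float:
    """Little's Law upper bound: outstanding requests / per-request latency."""
    return queue_depth * 1_000_000 / latency_us


# (queue depth, latency in microseconds, IOPS reported in the talk)
measurements = [(1, 133, 6_708), (32, 235, 134_538)]

for queue_depth, latency_us, reported in measurements:
    ceiling = iops_ceiling(queue_depth, latency_us)
    print(
        f"QD={queue_depth}: ceiling {ceiling:,.0f} IOPS, "
        f"reported {reported:,} ({reported / ceiling:.0%} of ceiling)"
    )

# Assumption: the network RTT is added on top of the QD=1 device latency.
remote_qd1 = iops_ceiling(1, 133 + 40)
print(f"QD=1 across the network: ceiling {remote_qd1:,.0f} IOPS")
```

The reported figures sit just under the ceilings, so the measurements are consistent with each other: going from a queue depth of 1 to 32 costs less than double the latency but buys roughly 20 times the IOPS.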

They did all of this testing to provide the most value and performance possible over a traditional array.

What Coho Data does is combine that high-performance flash with lower-performance spinning disk and serve it up through a network layer using NFS. It’s connected via an Arista SDN switch that supports OpenFlow so that the NFS requests can be programmatically sent to the right storage.

For more information, check out the following:

- Scott Lowe’s post – Don’t Let Raw Storage Metrics Solely Drive Your Buying Decision

- Chris Wahl’s post – Coho Data Brings SDN to SDS for a Killer Combination

- Eric Wright’s post – Coho-ly Moly this is a cool product!

And, of course, the Coho Data homepage